Fintech Horror Stories - The CFPB Riffs on AI

The regulators are coming.

Welcome back to another edition of Fintech Horror Stories! Before we get to the headline story, I’d be remiss if I didn’t acknowledge the flurry of regulatory activity this past week. It seems like every regulator had something to say about fintech, and each announcement was pretty monumental. Given that, we’re going to do something unprecedented and use the weekend to reflect on it all - today we’ll cover the CFPB’s take on AI, tomorrow we’ll tackle the SEC’s hard hits on crypto, and Sunday we’ll deep dive into the FDIC, OCC, and FRB’s revised third-party risk management guidance. With that said, let’s dive into today’s focus: the CFPB’s latest thoughts on AI. I will also take this opportunity to say “I told you so.” :)

If you’re subscribed to the CFPB’s mailing list, as I am, you might have been surprised this past Wednesday to get an oddly titled email from them: “The CFPB has entered the chat.” Their emails normally carry straightforward subject lines like “Credit bureau reporting companies should do more…” or “Why the largest credit card companies are…” or “Protecting People’s Access to their Money.” Naturally, I felt compelled to open it immediately, and it included a link to the guidance we’re going to discuss today. I should also mention that the CFPB chimed in on AI in the context of home appraisals the previous week. We won’t cover that here, but it’s a fair bet that this is just the first of what I expect to be many opinions they’ll offer on the matter. What is it all leading to, though?

To set the stage: in my experience, when the CFPB (or any regulator) issues a “spotlight” like this one, it is not a mere “public service announcement.” There is a lot behind the page. It’s usually the first step in warning banks, fintechs, and other financial institutions that the regulator has identified behavior it considers contrary to its interpretation of the law (typically based on complaints customers have submitted to its complaint database, which we’ve covered frequently in this space). This is typically followed by research, conducted through surveys or baselining studies that include sending requests for information to banks and other institutions. After that, the regulator will usually advocate for new laws or justify existing ones, or in other cases set precedents by issuing bulletins and revisions as its rulemaking authority allows. Lastly, it will conduct examinations, which can result in fines, penalties, bad press, and in some cases enough bad news that a company has to shut down.

Given this is a spotlight and we’re in the early stages of the aforementioned lifecycle, what exactly is the CFPB hinting at here? They highlighted three main points from their analysis:

Financial institutions are increasingly using chatbots as a cost-effective alternative to human customer service.

Chatbots may be useful for resolving basic inquiries, but their effectiveness wanes as problems become more complex.

Financial institutions risk violating legal obligations, eroding customer trust, and causing consumer harm when deploying chatbot technology.

They then provide a perspective on chatbots in consumer finance and do a nice job of covering the history, describing how customer service has evolved from face-to-face banking at branches, to call centers, to web and mobile banking, to where it is now with bots. We get an explanation of what chatbots are and how they work, how some use AI and some don’t, and which industries beyond financial services have deployed them. Next, they discuss the history and adoption of chatbots by banks and fintechs. This section is pretty helpful to anyone who isn’t closely plugged into the activities of the big banks, and what I found most valuable was learning about some of the third-party vendors powering the chatbots behind some of the biggest banks:

This part of the spotlight says so much without actually saying it. If I’m a consumer, I should read that information and understand that the next time I have a negative interaction with a bot, I have some additional insight into exactly who is to blame for screwing things up, beyond just the bank. If I’m the financial institution, I should recognize that the CFPB is putting me on notice to improve my third-party risk management (which we’ll talk more about on Sunday). And if I’m the vendor, I should start sweating, because it means I’m probably in the regulators’ crosshairs even if I didn’t think I was before.

The most powerful part of the spotlight comes next, drawing on the consumer complaints this newsletter is dedicated to (along with some other data, but for the sake of expediency we’ll focus on complaints). The CFPB plays nice by not naming the specific companies in the paper, but it links to each complaint, which lets a curious reader find out who the offender was. Since we aren’t the CFPB, we decided to summarize the chatbot complaints it highlighted and actually identify the companies involved (it’s interesting to see who gets called out the most, namely Citi and PayPal):

Difficulties in recognizing and resolving disputes - Citi, PayPal

Failure to provide meaningful customer assistance - Credit Collection Services, Bread Financial

Hindering access to timely human intervention - PayPal, Experian

System reliability and downtime - Citi, TransUnion

The third one, “hindering access to timely human intervention,” is pretty significant, in particular this statement:

“Deploying advanced technologies instead of humans may also be an intentional choice by entities seeking to grow revenue or minimize write offs. Indeed, advanced technologies may be less likely to waive fees, or to be open to negotiation on price.”

In essence, the CFPB is saying that some companies may have deployed chatbots because bots are less likely than humans to offer any “second chances” or “wiggle room” on things like late fees or interest rates, which in turn boosts the bottom line. Wow! Yeah, they just went there. I wonder if PayPal, Citi, or the others plan to respond, because the cynical implication is pretty damning: that these companies are marketing chatbots as a convenience when they are actually using them to boost late fee revenue and interest income, and to cut the time and expense they previously invested in human-centered customer service.

In fairness to the companies mentioned, I do wonder whether the customers who complained here tried other ways of getting in touch. I can’t imagine that most of the companies named here don’t offer some way to talk to a human, whether through messaging apps or phone calls. Smaller, lesser-known firms like CCS (Credit Collection Services, a debt collection agency) may genuinely have that problem, as the complaint the CFPB cites suggests. But the others almost certainly have a multi-channel servicing approach. Now, whether the quality of that service is any good is a whole different question, and you can argue that even before chatbots and AI there were clear indications from these institutions and others that customer service quality isn’t a priority (as evidenced, for example, by the trend of North American financial institutions outsourcing customer service to other countries, where agents may lack experience with the product or may not speak the customer’s language proficiently).

The CFPB closes the piece by listing the key risks it sees if the current state of financial services chatbots continues unchanged:

Noncompliance with federal consumer financial laws

Diminished customer service and trust when chatbots reduce access to individualized human support agents

Harming people

So, with all that said, here are my thoughts. As always, the reality of the situation isn’t as black and white as the CFPB (or the institutions deploying chatbots) would have you believe. I don’t actually believe there is a willful intent to screw over customers with chatbots, and I doubt that executives are sitting in some dark room gleefully lining their pockets with interest and fee revenue generated by diverting customers from human agents to chatbots. On the other hand, I also don’t believe that the current chatbots are of any meaningful use. In my own experience, 99% of the time, even if I’m using a messaging service, I want to talk to a real human being, and I will usually try to get straight to an actual agent.

What’s left unsaid in the spotlight piece is that very few, if any, financial institutions have actually deployed the newest generative AI models in customer servicing (although it does mention that some firms and banks have banned their employees from using some of them). The article talks about LLMs (large language models) and even mentions ChatGPT a few times, but what sets the current batch of LLMs apart from the legacy technology powering the chatbots this piece focuses on is the sheer power behind them and their ability to quickly parse data in a way that was not possible before, as well as (with GPT-4) the ability to accept images as input. However, the issues AI as a whole is currently grappling with, specifically accuracy, harmful or offensive responses, and privacy and security, are all a reflection of the humans behind these models (or the lack of humans, for that matter).

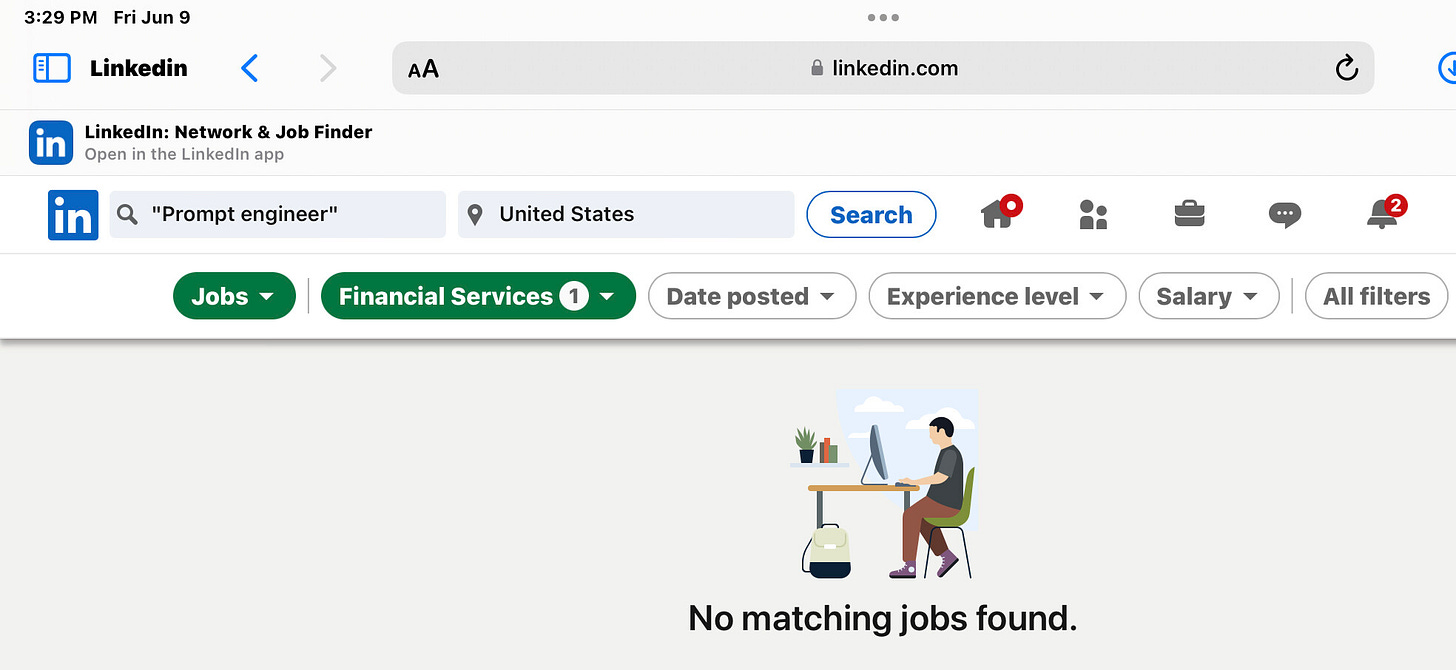

Perhaps, then, I can add to what the CFPB has said: the real issue plaguing banks, fintechs, and FIs is that they don’t have the right talent in place. It’s not necessarily that those already involved are doing anything malicious, but that there’s a deficit in both knowledge and sheer numbers. And what are they doing about it?

Kind of says it all, doesn’t it?

See you tomorrow.